Automate Your Job Search: Scraping 400+ LinkedIn Jobs with Python

The average job seeker spends 11 hours per week searching for jobs, according to LinkedIn. For tech roles it's even worse: you're dealing with hundreds of postings across multiple platforms. When my partner started her job search, she was spending hours every day just scrolling through LinkedIn. There had to be a better way.

The Challenge

For web developers, the market is overwhelming. A single search for "Frontend Developer" in London returned 401 results. Each posting requires:

- 5 seconds to review the title

- 3-4 clicks to view details

- 30-60 seconds to scan requirements

- Manual copy-pasting to track interesting roles

- Constant tab switching and back-navigation

For 401 jobs, that's hours of pure mechanical work!
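Here is a back-of-the-envelope Python calculation that puts a rough number on it. It assumes every posting gets both the 5-second title review and the 30 to 60 second requirements scan. It ignores the clicks and tab switching, so the result is a lower bound.

```python
# Rough manual review time for one search, using only the per-posting
# timings listed above (title review + requirements scan).
# Clicks and tab switching are left out, so this is a lower bound.
JOBS = 401
TITLE_SECONDS = 5
SCAN_SECONDS_LOW, SCAN_SECONDS_HIGH = 30, 60


def review_hours(jobs: int, per_job_seconds: int) -> float:
    """Convert a per-posting time in seconds into total hours."""
    return jobs * per_job_seconds / 3600


low = review_hours(JOBS, TITLE_SECONDS + SCAN_SECONDS_LOW)
high = review_hours(JOBS, TITLE_SECONDS + SCAN_SECONDS_HIGH)
print(f"{low:.1f} to {high:.1f} hours")  # 3.9 to 7.2 hours
```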

The Solution: Automation Pipeline

I built a three-step automation pipeline that cut the process down to 10 minutes:

- Scrape job data using Python

- Filter in bulk using Google Sheets

- Review only the most promising matches

Step 1: Smart Scraping

I used JobSpy as the base and built JobsParser on top of it to handle:

- A command-line interface (CLI)

- Rate limiting (to avoid LinkedIn blocks)

- Retry logic for failed requests
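The Python sketch below illustrates the two reliability features above in generic form. It is not JobsParser's actual code. `RateLimiter`, `with_retries` and the `fetch` callable are hypothetical names, and the delays are placeholder values, not settings tuned for LinkedIn.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimiter:
    """Enforce a minimum delay between consecutive calls."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last_call = float("-inf")

    def wait(self) -> None:
        # Sleep just long enough to keep calls min_interval seconds apart.
        elapsed = time.monotonic() - self._last_call
        if elapsed < self.min_interval:
            time.sleep(self.min_interval - elapsed)
        self._last_call = time.monotonic()


def with_retries(fetch: Callable[[], T], limiter: RateLimiter,
                 max_attempts: int = 3, base_delay: float = 1.0) -> T:
    """Call fetch() through the rate limiter, retrying failures with
    exponential backoff plus a little random jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        limiter.wait()
        try:
            return fetch()
        except Exception:  # hypothetical: narrow to network/HTTP errors
            if attempt == max_attempts:
                raise  # give up and surface the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            time.sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")
```

Spacing requests out and backing off after failures keeps the request rate low. That is the usual way to reduce the chance of being blocked. In a real scraper you would catch only the errors worth retrying, not every `Exception`.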

Here's how to install and run it:

```bash
pip install jobsparser
```

Bonus: search LinkedIn manually for your search term, note how many results it returns, and use that number for the `--results-wanted` parameter.

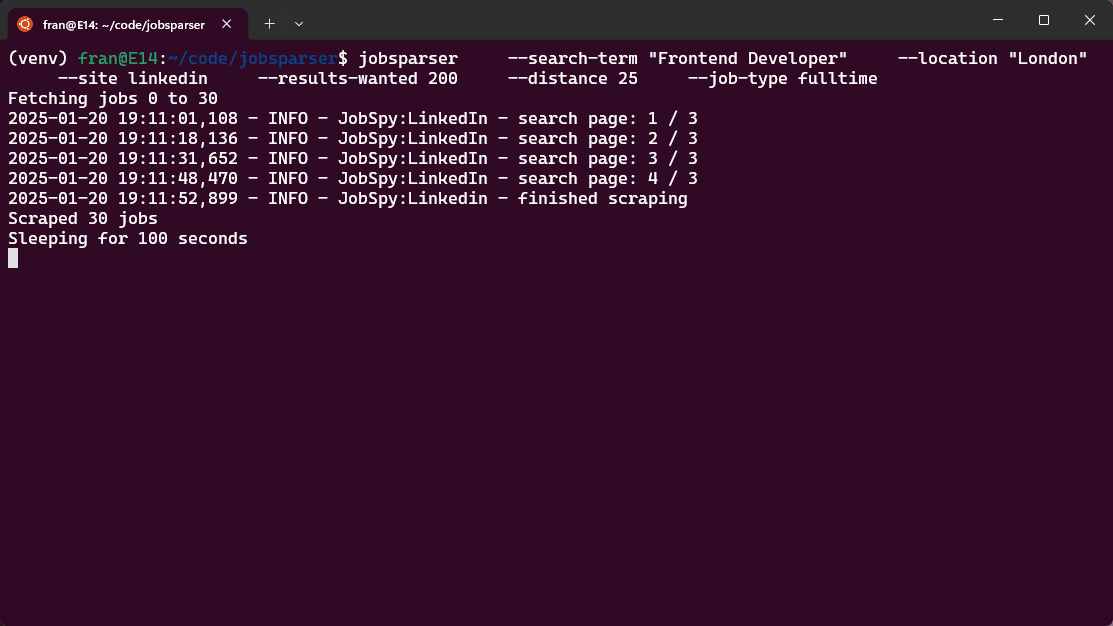

```bash
jobsparser \
  --search-term "Frontend Developer" \
  --location "London" \
  --site linkedin \
  --results-wanted 200 \
  --distance 25 \
  --job-type fulltime
```

If `jobsparser` is not on your PATH, you can run it as a module directly:

```bash
python -m jobsparser \
  --search-term "Frontend Developer" \
  --location "London" \
  --site linkedin \
  --results-wanted 200 \
  --distance 25 \
  --job-type fulltime
```

The output is a CSV in the `data` directory with rich data for each job:

- Job title and company

- Full description

- Job type and level

- Posted date

- Direct application URL

Besides LinkedIn, JobSpy and JobsParser also support other job boards, including Indeed, Glassdoor, Google and ZipRecruiter.
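Before moving to Sheets, you may want to peek at the CSV from Python. The stdlib sketch below does that. The helper names are hypothetical. The post doesn't give the output filename, so the sketch picks the most recently modified `.csv` in the directory. The columns you'll see are whatever JobSpy writes.

```python
import csv
from pathlib import Path


def newest_csv(data_dir: Path) -> Path:
    """Return the most recently modified CSV in data_dir (hypothetical helper)."""
    csvs = sorted(data_dir.glob("*.csv"), key=lambda p: p.stat().st_mtime)
    if not csvs:
        raise FileNotFoundError(f"no CSV files in {data_dir}")
    return csvs[-1]


def load_jobs(csv_path: Path) -> list[dict[str, str]]:
    """Read a jobs CSV into a list of row dicts keyed by column name."""
    with csv_path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

For example, `jobs = load_jobs(newest_csv(Path("data")))` loads the latest scrape. Running `print(list(jobs[0]))` then lists the available columns.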

Step 2: Bulk Filtering

While pandas seemed obvious (and I've given it a fair try), Google Sheets proved more flexible. Here's my filtering strategy:

- Time filter: last 7 days

  - Jobs older than a week have lower response rates

  - Fresh postings mean active hiring

- Experience filter: "job_level" matching your experience. For my partner, who is looking for her first role, I filtered for:

  - "Internship"

  - "Entry Level"

  - "Not Applicable"

- Tech stack filter: "description" contains:

  - The word "React"

You can build more complex filters that require several technologies at once.

This cut 401 jobs down to 8 matches!
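These filters live in Google Sheets, but you can also script the same three rules in plain Python. The sketch below makes a few assumptions:

- The `date_posted` column name and its ISO `YYYY-MM-DD` format are assumed from JobSpy-style output.
- Level matching is case-insensitive.
- The keyword check is a plain substring match, like a Sheets "contains" filter, so "react" would also match "reactive".

```python
from datetime import date, timedelta

# Values from the experience filter above, compared case-insensitively.
WANTED_LEVELS = frozenset({"internship", "entry level", "not applicable"})
# Every keyword must appear; add e.g. "typescript" to require more technologies.
REQUIRED_KEYWORDS = ("react",)


def is_recent(row: dict, today: date, max_age_days: int = 7) -> bool:
    """Keep jobs posted within the last max_age_days (assumes ISO dates)."""
    raw = (row.get("date_posted") or "")[:10]  # tolerate a trailing time part
    try:
        posted = date.fromisoformat(raw)
    except ValueError:
        return False  # missing or unparseable dates are dropped
    return today - timedelta(days=max_age_days) <= posted <= today


def matches_level(row: dict, levels: frozenset = WANTED_LEVELS) -> bool:
    """Keep jobs whose job_level is one of the wanted levels."""
    return (row.get("job_level") or "").strip().lower() in levels


def mentions_all(row: dict, keywords: tuple = REQUIRED_KEYWORDS) -> bool:
    """Keep jobs whose description contains every keyword (substring match)."""
    text = (row.get("description") or "").lower()
    return all(k.lower() in text for k in keywords)


def filter_jobs(rows: list, today: date) -> list:
    """Apply the time, experience and tech stack filters together."""
    return [
        r for r in rows
        if is_recent(r, today) and matches_level(r) and mentions_all(r)
    ]
```

With rows loaded from the CSV, `filter_jobs(rows, date.today())` returns only the matches.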

Step 3: Smart Review

For the filtered jobs:

- Quick scan of title/company (10 seconds)

- Open the `job_url` of promising roles in a new tab

- Check the description in detail
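The review itself stays manual, but the mechanical part can be scripted with Python's standard `webbrowser` module: listing title and company, then opening the chosen URLs. The function names are hypothetical. The `title` and `company` column names are assumptions. `job_url` is the column used above.

```python
import webbrowser
from typing import Callable, Iterable


def summarize(rows: Iterable[dict]) -> list[str]:
    """One line per job (title, company, URL) for a quick scan."""
    return [
        f"{r.get('title') or '?'} @ {r.get('company') or '?'}: {r.get('job_url') or ''}"
        for r in rows
    ]


def open_in_tabs(
    rows: Iterable[dict],
    opener: Callable[[str], bool] = webbrowser.open_new_tab,
) -> int:
    """Open each row's job_url in a new browser tab; return how many were opened."""
    opened = 0
    for r in rows:
        url = r.get("job_url")
        if url:  # skip rows without a URL
            opener(url)
            opened += 1
    return opened
```

Print `summarize(matches)`, pick the promising roles, and pass only those to `open_in_tabs`. The `opener` parameter lets you test the function without launching a browser.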

Conclusion

I hope this tool makes your job search a slightly more enjoyable experience.

If you have any questions or feedback, please let me know.